Analysis proves patience is needed to properly measure the impact of a grant

Posted on 05 Mar 2026

Posted on 01 Sep 2025

By Jen Riley, chief impact officer, SmartyGrants, and Dr Liz Branigan, knowledge and practice director, Philanthropy Australia

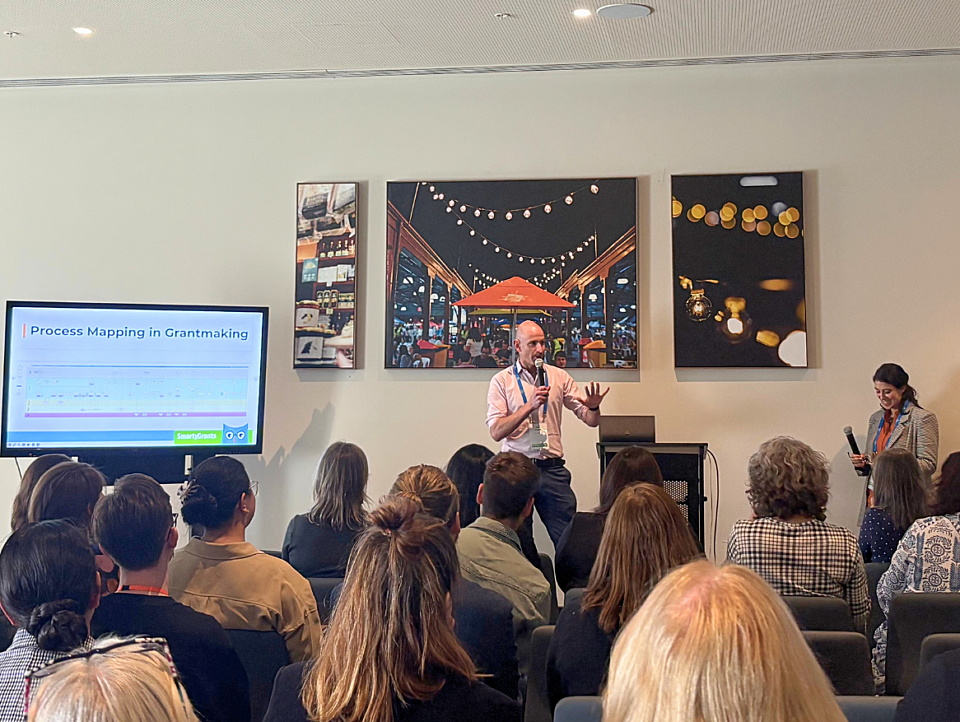

One of the key lessons from the recent Grant Impact Forum in Melbourne was that Australia’s leading evaluation experts are more interested in learning than in winning arguments.

Some of the nation’s most accomplished evaluators and grantmakers came together at the inaugural forum in June, hosted by SmartyGrants and Learning and Evaluation for Australian Funders (LEAF).

A highlight of the forum was a panel session on evidence, accountability and learning, moderated by Clear Horizon founder Dr Jess Dart. The session brought together the formidable wits of former RMIT University Professor of Public Sector Evaluation Patricia Rogers and Australian Centre for Evaluation (ACE) managing director Eleanor Williams.

The conversation had been highly anticipated, with many in the audience bringing their imaginary popcorn. In 2023, Rogers published a pointed critique of ACE’s methods, questioning its emphasis on randomised controlled trials (RCTs) as the default approach for assessing government policy impact.

While ACE was established to strengthen evaluation in the Australian Public Service, Rogers warned that privileging RCTs as the “gold standard” risked marginalising other valuable methods of evaluation, particularly those suited to complex systems and diverse communities.

Her critique struck a nerve in the evaluation community, challenging not just methodological preferences but deeper assumptions about whose knowledge counts, how rigour is defined, and the politics behind evidence-based policymaking.

The article became a flashpoint, fuelling debate at the Australian Evaluation Society conference and sector-wide discussions about balancing scientific credibility with contextual relevance, equity and Indigenous knowledge.

But the expected clash of ideologies gave way to something more constructive. The panel offered open, thoughtful dialogue and explored many of the field’s big questions.

“We are not just interested in ticking the boxes. We want to see people thinking about what really matters here, and how we can genuinely learn,” Rogers said.

Rather than a battle, what unfolded was a deepened understanding of shared concerns, and the emergence of some subtle but meaningful differences of emphasis.

Both Williams and Rogers expressed strong commitment to pluralist, fit-for-purpose evaluation approaches. However, their views on the role of evidence reflected the different institutional contexts in which they operate.

Dart’s facilitation helped frame the session as a model for how the sector can engage constructively on complex issues of evidence, power and evaluation paradigms.


The panel began with an agreement that grantmakers seek evidence not just for compliance, but to better understand what works.

Yet too often, Williams said, this leads to formulaic responses, such as allocating a fixed percentage of funding to evaluation, without reflecting on its purpose or relevance.

“It really is a bit more of a thought exercise than that. To say, what question are we trying to ask and answer here? That’s the real job,” she said.

Williams described the tension between ambitious evaluation expectations and the day-to-day realities of grant delivery. In the case of smaller grants, heavy-handed evaluation requirements can undermine delivery and distract from impact.

She recalled one case where a volunteer-run sporting club, funded to build a kiosk, was asked to demonstrate that the kiosk strengthened community cohesion, a task few professional evaluators could tackle credibly.

“If you just showed you built the kiosk, people are using it, people spend time there, that is probably enough demonstrating,” Williams said. “We have got to always stand back and think, what does genuine, fit-for-purpose look like, and what’s the burden we’re placing on the people we’re trying to support?”

Rogers agreed, warning against unrealistic demands for proof that may distort grantee behaviour or produce unreliable results.

Dart invited Rogers to discuss evidence at larger scales, such as the evaluation of portfolios or systems-change efforts.

Rogers responded with her “bridge, not Lego blocks” metaphor. She described the flawed assumption that evaluations can be built project-by-project, then stacked together to produce a whole.

“The default I sometimes see is… you have got this massive thing that just destroys the projects, and actually is not that useful,” she said.

She advocated for a consistent, light-touch layer of data collection across a portfolio, supplemented with closer examinations of priority areas where richer insights are needed.

Williams agreed with the approach but offered an important nuance: in public service contexts, decision-makers often privilege quantitative evidence. Pluralist approaches are critical, but expectations of measurable outcomes remain strong.

This was one of the few points where differences surfaced. Rogers leant towards challenging entrenched evidence hierarchies. Williams focused on navigating them pragmatically.

The panel strongly supported improving accountability, suggesting funders too often treated it as “a narrow exercise” focused on whether grantees did everything they said they would and met all their targets.

This approach, they agreed, distorts evidence and undermines learning.

Williams shared an example from an early learning grants program. “Throughout implementation, grantees were saying, ‘We are not quite on track.’ But then at 12 months, they all magically came on track. And I thought, that cannot be right. That is not helping us.”

Rogers argued that auditors, not evaluators, should carry primary responsibility for financial accountability.

“Auditors do a much better job around accountability; they actually look at where the money goes. Maybe we should just ease back on what we expect evaluation to do… and talk more about accountability for learning and effective management,” she said.

Dart added: “We should be deeply interested in what does not work.”

Williams agreed, while noting that formal accountability pressures remain high in government. Changing that culture, she said, requires leadership.

The conversation turned to the conditions needed for learning, especially creating space for honest reflection on what is and is not working.

Cultural change is difficult, panellists said. Grantees may fear that admitting failure could jeopardise future funding. Boards and leaders often signal that only success stories are welcome.

The panel argued that a genuine learning culture requires leadership from all levels. Funders must model openness. Grantees need psychological safety. Evaluation frameworks must highlight learning, not just acquittals.

Rogers said, “There is sometimes this default that you do a theory of change that actually has no theory… Rather than saying, what is the narrative about change? What do we really need to get better evidence on?”

Williams added, “Unless senior leadership in government signals that this is valued, evaluators alone cannot shift the culture … leaders need to walk the talk.”

In response to a question on First Nations engagement, the panel emphasised the importance of respecting Indigenous knowledge and data sovereignty.

“Accountability for good management is, tell us about the evidence you are using… and that includes different types of evidence, like collaborative sense-making,” Rogers said.

Williams noted that hierarchies of evidence remain deeply embedded in some evaluation culture and practice. “There is no getting around that in some environments. But it is about how we open up the dialogue.”

She added, “We want to continue to meet those needs for accountability as traditionally understood, while also bringing in this sense of… how we can improve. These things do not need to live in separate worlds.”

Dart said the panel modelled a constructive way forward.

“It’s important to keep talking about fit-for-purpose evidence, rather than applying a blanket approach. Given that we are seeing more and more intersecting challenges and the need for systems-wide solutions, it’s going to be critical to broaden our definitions of rigour and evidence.”

She also called for openness about the values driving evaluation.

“These tensions raised in this panel aren’t new, but they are particularly pressing in grant evaluation, where ideological choices often go unnamed. We can’t pretend evaluation is neutral or that one size fits all.”

The panel gave the sector much to reflect on. It also raised enduring questions: how do funders and evaluators navigate the tension between constructivist and positivist paradigms? How do they honour multiple ways of knowing within rigid institutional frameworks? And who gets to define what counts as valid evidence?

These questions could not be resolved in a single forum. But they are essential and will require sustained, deliberate dialogue.

Conversations like this one are a valuable step towards a shared vision for grant evaluation in Australia: one grounded in clarity, learning and respect.

Rather than defaulting to randomised controlled trials (RCTs) or fixed evaluation formulas, begin by asking: What do we need to learn? What approach is fit for purpose in this context?

Avoid the “Lego blocks” approach of aggregating project-level evaluations. Instead, design a light-touch framework across the portfolio, supported by targeted close examinations where deeper insight is needed.

See accountability not only as meeting targets, but as a commitment to honest reflection and adaptive learning – especially when things don’t go as planned.

Funders and public sector leaders can create space for richer insight by actively valuing qualitative data, community voice, and Indigenous knowledge systems.
