Posted on 27 Nov 2024

By Jen Riley, chief impact officer, SmartyGrants

As the chief impact officer at SmartyGrants, I’m always on the hunt for better ways to measure and improve impact, and a recent commentary from two of the world’s leaders in the field got me thinking.

With the sector juggling restricted grants, tight timelines, high-stakes acquittals, and a must-succeed narrative, it’s no wonder many organisations default to “polished” reporting.

In an article published in Singapore’s Straits Times, Dr Jean Liu and Maryanna Abdo of the Centre for Evidence and Implementation suggest that the global decline in giving to charities has coincided with declining trust in those institutions.

They suggest that the answer to that problem is “better data”.

They are not alone in that view. The suggestion that better-quality data will ensure funds are directed to the most effective solutions, and away from less efficient activities, is repeated across the globe.

This argument seems good in principle. But demonstrating what truly works and what doesn’t is not straightforward.

It’s not about misleading – it’s about survival. It’s about trying to be seen as worthy of support in a system that often equates complexity with risk, a system that makes it genuinely hard to communicate impact with honesty, clarity, and confidence.

So we report what’s safe. We soften what’s tricky. And we miss out on the real gold: the insights that would help us do better.

My view is that “better data” is a red herring. While not-for-profits often reflect deeply on their work and know what is and what isn’t working, they are constrained in what they can share.

In one case, a CEO told me that surprising new information had revealed her organisation should radically change how it accepted vulnerable new clients, and hire much more experienced staff for that process.

But when I asked the CEO why she wouldn’t share this discovery with others in the sector, she replied, “Admitting this looks like we don’t know what we are doing.”

In short, the organisation didn’t want to look bad to its funders.

Leaders fear that sharing what they’ve learnt, including failures, can tarnish a not-for-profit’s reputation and have financial repercussions.

Instead, not-for-profits feel compelled to overstate their success and under-report their struggles, out of fear that being too honest could result in reduced funding.

The pressure to present only positive outcomes leads to unrealistic reporting.

I’ve seen cases where data presented to funders was so consistently positive – including claims such as 100% achievement of outcomes – that while it was impressive, it was disconnected from reality. The pressure to present a flawless picture of success can distort the very data we rely on to build trust.

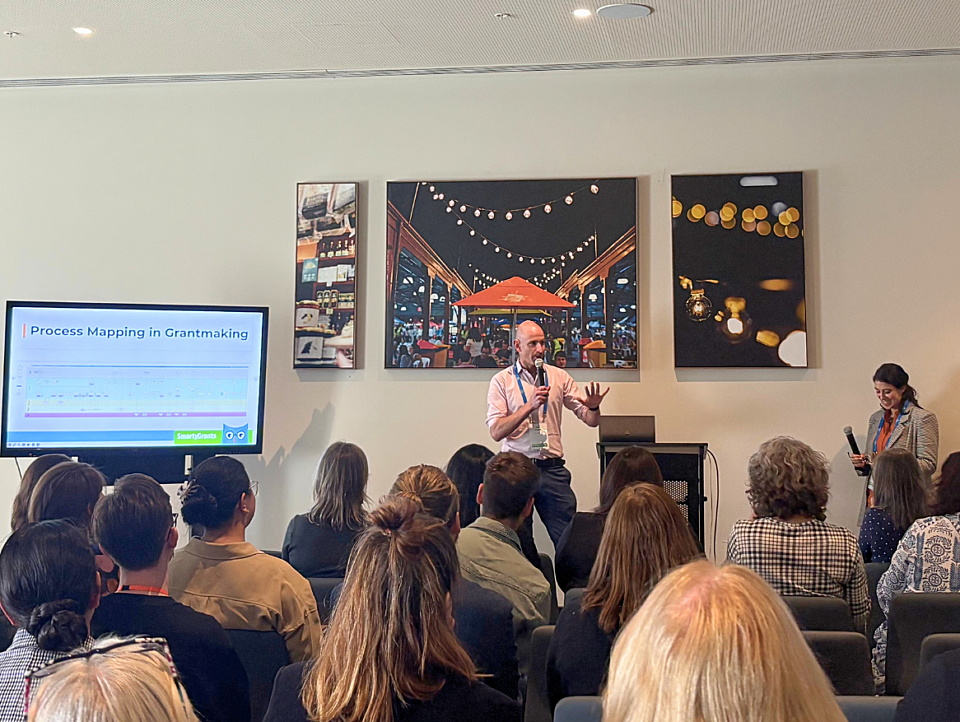

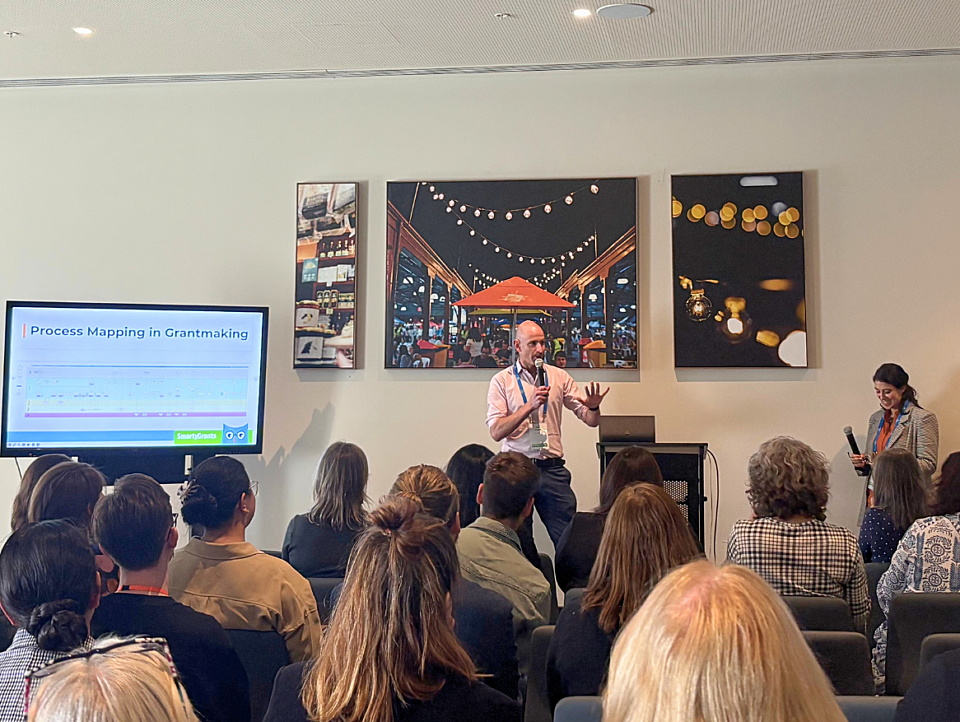

At a recent Queensland Council of Social Service (QCOSS) gathering, I shared this message with grantmakers and changemakers: we don’t need more data – we need a better relationship with the data we already have.

If we want to shift the culture, we need to shift the conversation. That starts with reframing the way we talk about data.

Instead of “Subjective participant experience relating to interpersonal safety and dignity,” how about: “Did participants feel safe and respected?”

Instead of “Participant likelihood to recommend program to others,” try: “Would they tell a friend to come along?”

Plain language is not a downgrade. It’s an invitation – for your board, your team, your funders – to lean in and actually engage.

And when you combine the resulting data with context and a participant voice? That’s where real meaning lives.

“I walked in expecting to feel awkward and leave early. But I stayed the whole session. People asked my pronouns, used them, and actually listened. I didn’t know how much I needed that until it happened.” – Alex, 19

No bar chart can carry the kind of impact that Alex’s personal account does. Pair it with data, and it becomes a powerful insight, not just a number.

I therefore understand the push by Australia’s Charities Minister, Andrew Leigh, for greater use of randomised controlled trials as a way of putting some scientific rigour around impact data. However, while RCTs can offer objective insights into some interventions, they are not a perfect fit for every social challenge. They require significant resources, can be slow to yield results, and are often difficult to apply to complex social issues where multiple factors are constantly in flux.

For example, in many communities, such as the City of Greater Dandenong, which is home to a high number of refugees and asylum seekers, the systemic issues affecting outcomes such as post-conflict trauma, socioeconomic disadvantage and access to work rights make simple data points insufficient for understanding true impact.

Another example of the complexity of social problems can be seen in First Nations communities, where individuals are twice as likely as non-Indigenous individuals to die young from preventable causes. Factors such as “sorry business” associated with the death of family members can prevent participation in programs that might otherwise help close the gap in life expectancy.

These factors are not failures of the not-for-profit’s program, but reflections of life in such communities. Labelling such outcomes as failures oversimplifies the real challenges that community organisations face. It unfairly shifts the responsibility for systemic problems onto not-for-profits rather than acknowledging the social and economic context they operate in.

We often treat impact reporting like a finish line. But what if it were more like a feedback loop?

The organisations I see reporting their impact well are creating space for reflection. They’re experimenting with dashboards, yarning circles, even art. They’re designing reports for different audiences and using the 1-3-25 model to give everyone something they can actually use (one page of main messages, a three-page executive summary, and no more than 25 pages of findings).

Crucially, they’re transparent about their achievements and findings.

This isn’t a call-out. It’s a call-in.

To the funders reading this: ask good questions. Make room for grey areas. Reward curiosity.

To the not-for-profits: you’re doing hard work under complex conditions. Don’t be afraid to show what you’ve learned as well as what you’ve achieved.

To all of us: let’s stop seeing data as a performance, and start using it as a tool for collective growth.

Because when we shift the culture of data – from fear to reflection, from proof to learning – we make it easier for everyone in the system to do better work.

For grantmakers who want more on impact without performance pressure, I recommend visiting simna.com.au and exploring how SmartyGrants is helping grantmakers to have braver conversations about data through its new analytics tools.

Jen Riley gave this summary of the value of impact data and the story that goes with it. She was speaking at the Gather for Good event in Melbourne in support of social enterprises. Source: LinkedIn

The push for better data as a way to address declining trust in institutions oversimplifies these challenges. And it won’t build trust or improve outcomes if we don’t also create an environment where funders and not-for-profits can engage in honest, open communication about both successes and setbacks. Trust is built when not-for-profits can candidly share the obstacles they face, without fear of being penalised for reporting less-than-perfect results.

Gathering more data won’t solve any problems if that data is curated to obscure any hint of failure or complexity.

Many years ago, I worked for Oxfam, where a large percentage of funding was from the Australian public. This funding was referred to internally as “unrestricted”, as opposed to funding from government grants and bilateral donors, which was referred to as “restricted” – its use was limited to a particular program with acquittals attached. The dynamic of unrestricted funding (where we were not reporting back to funders) was associated with a robust monitoring, evaluation and learning culture whereby true accounts of outcomes emerged and programs adapted accordingly. This culture is missing inside organisations when 80–90% of funding is “restricted”.

A focus on “polished” data and evidence is a missed opportunity for genuine dialogue between not-for-profits, philanthropists and government agencies. Not-for-profits have deep insights into the communities they serve, and fostering open conversations about both successes and challenges could educate funders on the real nuances within these communities. Using data to generate “perfect” results is a missed opportunity for genuine learning.

Data can be a tool for educating funders on the complex social, economic and cultural factors at play. Using data in this way can shift the focus from simply measuring outcomes to understanding the deeper context that affects those outcomes, enabling more meaningful, long-term impact.

What not-for-profits really need is a culture where they feel safe to share their full range of experiences – both their successes and the systemic barriers that prevent them from fully achieving the outcomes they seek. We must avoid a situation in which funders want only positive stories, and we must create an environment of learning and improvement. Open, honest dialogue should be the goal.

Many of the funders I work with understand that not-for-profits operate in incredibly challenging environments, where outcomes may fall short for reasons beyond an organisation’s control. They are genuinely interested in hearing about both successes and setbacks. We need to break the current deadlock; otherwise, more data will simply lead to more polished, yet disconnected, success stories.

Ultimately, the call for “better data” should be reframed as a call for “better culture” in which we foster a culture of learning, adaptation, and honest reflection. Only in this way can we move beyond superficial metrics and work toward real, sustainable change.

Posted on 15 Dec 2025

The federal government is trialling longer-term contracts for not-for-profits that deliver…

Posted on 15 Dec 2025

A Queensland audit has made a string of critical findings about the handling of grants in a $330…

Posted on 15 Dec 2025

The federal government’s recent reforms to the Commonwealth procurement rules (CPRs) mark a pivotal…

Posted on 15 Dec 2025

With billions of dollars at stake – including vast sums being allocated by governments – grantmakers…

Posted on 15 Dec 2025

Nearly 100 grantmakers converged on Melbourne recently to address the big issues facing the…

Posted on 10 Dec 2025

Just one-in-four not-for-profits feels financially sustainable, according to a new survey by the…

Posted on 10 Dec 2025

The Foundation for Rural & Regional Renewal (FRRR) has released a new free data tool to offer…

Posted on 10 Dec 2025

A major new report says a cohesive, national, all-governments strategy is required to ensure better…

Posted on 08 Dec 2025

A pioneering welfare effort that helps solo mums into self-employment, a First Nations-led impact…

Posted on 24 Nov 2025

The deployment of third-party grant assessors can reduce the risks to funders of corruption,…

Posted on 21 Oct 2025

An artificial intelligence tool to help not-for-profits and charities craft stronger grant…

Posted on 21 Oct 2025

Artificial intelligence (AI) is becoming an essential tool for not-for-profits seeking to win…
